Introduction

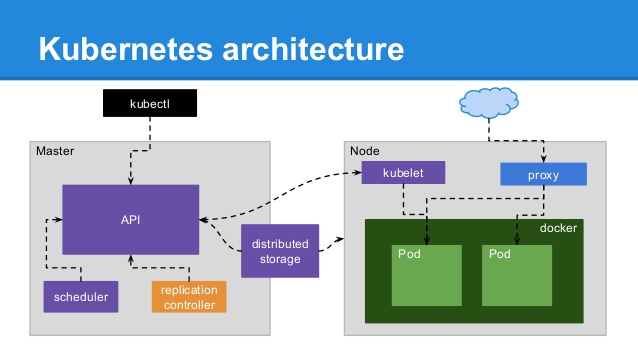

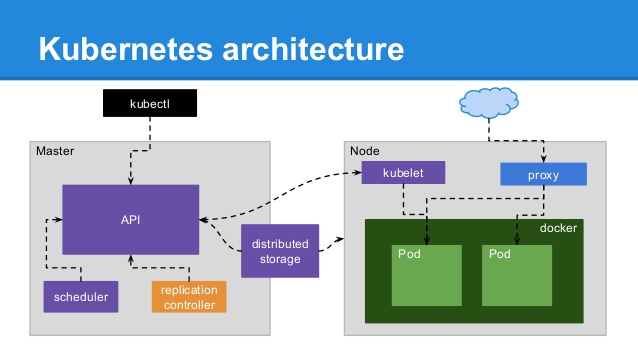

Kubernetes is an open-source, production-grade container orchestration system for deploying and managing containerized (Docker) applications. Managed Kubernetes services are also available, such as Amazon Elastic Container Service for Kubernetes (EKS) and Azure Kubernetes Service (AKS).

kubectl

Kubernetes cluster users perform management tasks with the kubectl binary, which talks to the API Server. Example kubectl commands:

# Display the pods in a namespace

kubectl get pods -n my_namespace

# Execute a command in a container

kubectl -n my_namespace exec -it pods_name -- sh

# Listen on ports 5000 and 6000 locally, forwarding data to/from ports 5000 and 6000 in the pod

kubectl -n my_namespace port-forward pod/mypod 5000 6000

# Get output from ruby-container from pod my-pod-pd

kubectl attach my-pod-pd -c ruby-container

Figure: kubectl execution flow (source: 1ambda.github.io)

kubelet

kubelet and kube-proxy run on each compute node (VM, worker, EC2 instance, etc.). kubelet listens on TCP port 10250 and on the read-only port 10255 (which has no authentication/authorization). The API Server acts as a reverse proxy to the kubelet and to aggregated API servers. To fulfill commands such as exec and port-forward, the API Server connects to the kubelet and opens a websocket connection that connects stdin, stdout, or stderr to the user's original call [01].
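To make the exec flow above more concrete, here is a minimal shell sketch that builds the API Server request path behind a command such as kubectl exec. The helper name exec_path is hypothetical, the arguments are not URL-encoded, and the parameter order is only illustrative; running kubectl with a high verbosity such as -v=8 shows the request it actually sends.

```shell
#!/bin/sh
# Sketch: build the pod "exec" subresource path served by the API Server.
# exec_path is a hypothetical helper; arguments are NOT URL-encoded.
exec_path() (
  ns="$1"
  pod="$2"
  shift 2
  query="stdin=true&stdout=true&tty=true"
  for arg in "$@"; do
    # One "command" query parameter per element of the command line.
    query="${query}&command=${arg}"
  done
  printf '/api/v1/namespaces/%s/pods/%s/exec?%s\n' "$ns" "$pod" "$query"
)

# The request path behind: kubectl -n my_namespace exec -it pods_name -- sh
exec_path my_namespace pods_name sh
```

The API Server receives this request, then opens its own connection to the kubelet on the node that runs the pod and relays the streams.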

API Aggregation

API aggregation means installing or writing additional APIs into the Kubernetes API Server, i.e. extending the core API Server.

Vulnerability

The vulnerability (CVE-2018-1002105) is in the Kubernetes API Server: with a crafted request, a client can execute arbitrary commands on the backend servers (pods) through the same channel that the client established to the backend through the API Server [02].

Check the Kubernetes version of the nodes

$ kubectl get nodes -o wide

NAME           STATUS   ROLES   AGE   VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE                                        KERNEL-VERSION      CONTAINER-RUNTIME
pd-worker-01   Ready    node    13d   v1.12.3   10.250.0.6    <none>        Container Linux by CoreOS 1745.7.0 (Rhyolite)   4.14.48-coreos-r2   docker://18.3.1
pd-worker-02   Ready    node    13d   v1.12.3   10.250.0.5    <none>        Container Linux by CoreOS 1745.7.0 (Rhyolite)   4.14.48-coreos-r2   docker://18.3.1
pd-worker-03   Ready    node    13d   v1.12.3   10.250.0.4    <none>        Container Linux by CoreOS 1745.7.0 (Rhyolite)   4.14.48-coreos-r2   docker://18.3.1
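As a rough aid for reading the VERSION column, the sketch below compares a version string against the releases that the upstream issue [04] lists as carrying the fix (v1.10.11, v1.11.5, v1.12.3 and v1.13.0-rc.1). The function name is_patched is hypothetical, and pre-release or vendor suffixes such as -rc.1 are simply ignored, which is a simplification. Note also that the node listing reports the kubelet version, while the flaw is in the API Server; kubectl version reports the server version.

```shell
#!/bin/sh
# Sketch: exit status 0 if the version carries the CVE-2018-1002105 fix.
# is_patched is a hypothetical helper. Suffixes like "-rc.1" are dropped,
# so pre-releases are treated like the final release (simplification).
is_patched() (
  v="${1#v}"          # strip the leading "v"
  v="${v%%[-+]*}"     # drop "-rc.1", "+build" and similar suffixes
  major="${v%%.*}"
  rest="${v#*.}"
  minor="${rest%%.*}"
  patch="${rest#*.}"
  if [ "$patch" = "$rest" ]; then
    patch=0           # no patch component given, e.g. "1.12"
  fi
  if [ "$major" -gt 1 ]; then
    exit 0
  fi
  case "$minor" in
    10) [ "$patch" -ge 11 ] ;;
    11) [ "$patch" -ge 5 ] ;;
    12) [ "$patch" -ge 3 ] ;;
    *)  [ "$minor" -ge 13 ] ;;   # 1.9 and older never received the fix
  esac
)

for v in v1.12.3 v1.12.2 v1.10.11; do
  if is_patched "$v"; then
    echo "$v patched"
  else
    echo "$v vulnerable"
  fi
done
```

By this check, the v1.12.3 nodes listed above are on a fixed release.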

Vulnerable API Servers

If the API Server response looks like the one below and you are running a vulnerable version of Kubernetes, you are exposed to the anonymous-user escalation; patch Kubernetes immediately.

HTTP response code 403 means Forbidden, which is an authorization error. It implies that the request successfully passed the authentication phase.

{
  "kind": "Status",
  "apiVersion": "v1",
  "metadata": {},
  "status": "Failure",
  "message": "forbidden: User \"system:anonymous\" cannot get path \"/api/v1/\"",
  "reason": "Forbidden",
  "details": {},
  "code": 403
}
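The reasoning about the 403 response can be sketched as a small shell function. This is an illustration only: the function name and its result labels are hypothetical, and it relies on the fact that a request rejected at the authentication phase gets a 401 instead of a 403.

```shell
#!/bin/sh
# Sketch: interpret an unauthenticated request's reply from the API Server.
# $1 = HTTP status code, $2 = response body.
# The function name and the result labels are hypothetical.
anonymous_access_state() (
  code="$1"
  body="$2"
  case "$code" in
    401)
      # Rejected during authentication: anonymous requests are not accepted.
      echo "unauthenticated"
      ;;
    403)
      case "$body" in
        *system:anonymous*)
          # Authenticated as system:anonymous, then denied by authorization.
          echo "anonymous-accepted"
          ;;
        *)
          echo "forbidden"
          ;;
      esac
      ;;
    *)
      echo "other"
      ;;
  esac
)

# Possible use with curl; APISERVER is a placeholder for your endpoint:
#   code=$(curl -sk -o /tmp/body -w '%{http_code}' "https://$APISERVER/api/v1/")
#   anonymous_access_state "$code" "$(cat /tmp/body)"
anonymous_access_state 403 'forbidden: User "system:anonymous" cannot get path "/api/v1/"'
```

A result of anonymous-accepted matches the response shown above: the server accepted the anonymous identity and only the authorization layer said no.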

anonymous user

By default, requests to the kubelet's HTTPS endpoint that are not rejected by other configured authentication methods are treated as anonymous requests, and given a username of system:anonymous and a group of system:unauthenticated.

Mitigations

There are three escalation paths, each with its own mitigation:

1. anonymous user -> aggregated API server

Set the API Server flag --anonymous-auth to false, then verify it on each API Server pod:

$ kubectl get po kube-apiserver-01 -n prod -o yaml | grep -i "anonymous-auth"
- --anonymous-auth=false

$ kubectl get po kube-apiserver-01 -n stage -o yaml | grep -i "anonymous-auth"
- --anonymous-auth=false

2. authenticated user -> aggregated API server

Suspend the use of aggregated API servers.

3. authorized pod exec/attach/portforward -> kubelet API

Remove pod exec/attach/portforward permissions from users who do not need them.
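The first and third mitigations can be checked with a short script. The sketch below is one possible approach, not a definitive audit: anonymous_auth_setting and can_exec are hypothetical helper names, the user and namespace in the usage comments are placeholders, and can_exec needs kubectl plus permission to impersonate the user being checked.

```shell
#!/bin/sh
# Sketch: helpers for checking mitigations 1 and 3. Names are hypothetical.

# Mitigation 1: read a pod manifest on stdin and print the value of
# --anonymous-auth, or "unset" when the flag is not given explicitly
# (in which case the server default applies).
anonymous_auth_setting() (
  value="$(sed -n 's/.*--anonymous-auth=\([a-z]*\).*/\1/p' | head -n 1)"
  echo "${value:-unset}"
)

# Mitigation 3: ask the API Server whether a user may exec into pods.
# $1 = user to impersonate, $2 = namespace. Prints "yes" or "no".
# Use --subresource=attach or --subresource=portforward for the other two.
can_exec() {
  kubectl auth can-i create pods --subresource=exec --as="$1" -n "$2"
}

# Possible use (pod name and namespace taken from the commands above,
# "jane" is a placeholder user):
#   kubectl get po kube-apiserver-01 -n prod -o yaml | anonymous_auth_setting
#   can_exec jane my_namespace
printf '    - --anonymous-auth=false\n' | anonymous_auth_setting
```

Printing unset separately from true keeps an explicit setting distinguishable from a reliance on defaults, which is easy to miss when only grepping for the flag.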

References

[01]. https://docs.openshift.com/container-platform/3.11/architecture/networking/remote_commands.html

[02]. https://docs.openshift.com/container-platform/3.11/architecture/networking/remote_commands.html

[03]. https://elastisys.com/2018/12/04/kubernetes-critical-security-flaw-cve-2018-1002105/

[04]. https://github.com/kubernetes/kubernetes/issues/71411